Who is this certification for?

This certification is for those who implement security measures in Azure. Unlike an architect certification, where much of the required knowledge is about planning and designing, the security engineer cert is more about getting to grips with the nuts and bolts of security.

Someone who has gained the knowledge required to pass this exam should be able to implement and monitor security controls and set up identity and access permissions. Additionally, they will be able to safeguard data, applications, and networks across Azure, multi-cloud, and hybrid environments.

This exam and the resulting qualification could therefore be described as a security-focused equivalent of the Azure Administrator certification.

Exam requirements

To obtain the Azure Security Engineer Associate certification, only one exam, AZ-500, is required. There are no prerequisites for taking the AZ-500, but if you haven’t already, passing AZ-900 and AZ-104 before attempting AZ-500 will give you a solid foundation and a lot of confidence in knowing where to find the features relevant to security posture.

Topics covered

The headline knowledge areas that make up the AZ-500 are manage identity and access, secure networking, manage security operations and secure compute, storage, and databases. Each is weighted at roughly one quarter of the exam. Let’s dive into each one individually and see what you can expect.

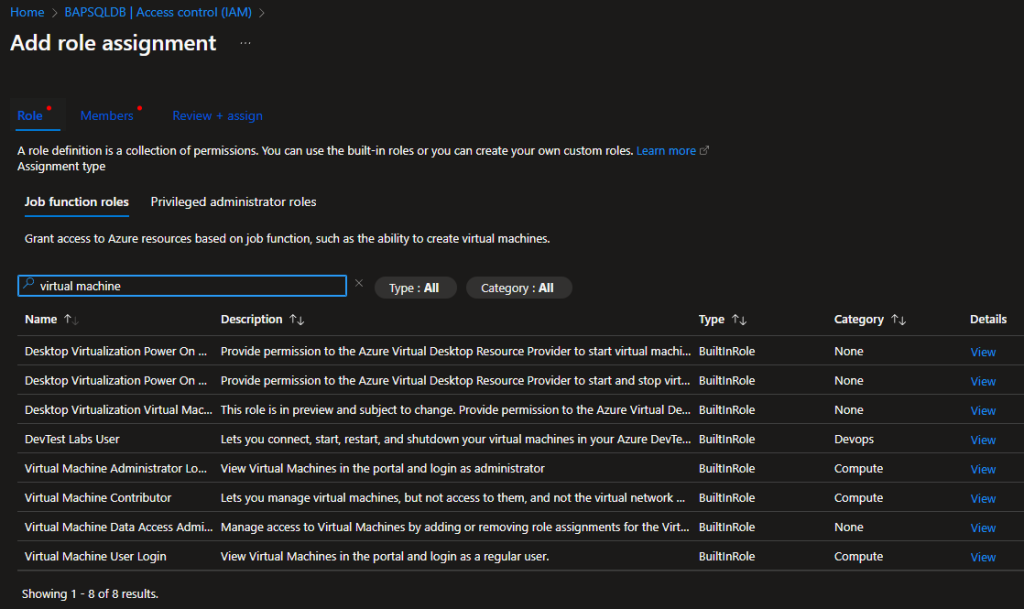

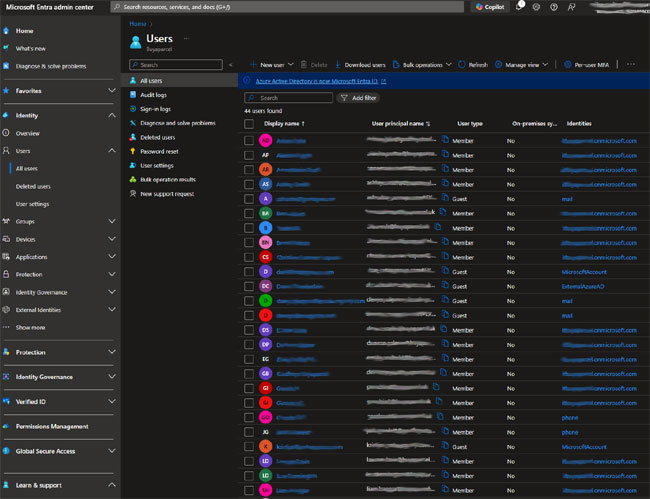

The manage identity and access topic unsurprisingly covers the various features and functionality of Microsoft Entra ID (formerly Azure Active Directory). There is a section on managing identities, which covers user management, groups, leveraging external identities and implementing Microsoft Entra ID Protection.

The next section is manage authentication by using Microsoft Entra ID, which includes the two methods for working with Active Directory identities: Microsoft Entra Connect and Microsoft Entra Cloud Sync. This part also covers the methods used to authenticate credentials between an AD domain and an Entra tenant, namely password hash synchronisation, pass-through authentication and federation. The remainder of this important part covers technologies such as MFA, passwordless authentication, password protection, Entra ID single sign-on (SSO), Microsoft Entra Verified ID and modern authentication protocols.

The final section of manage identity and access is manage application access in Microsoft Entra ID, centred around Entra ID app registration, managed identities and service principals. This section, and the topic itself, concludes with Microsoft Entra Application Proxy.

The next topic in the AZ-500 learning path is a favourite of mine: networking. This covers networking features in Azure with an emphasis on security. It is broken into three sections, the first of which is plan and implement security for virtual networks. For this part of the syllabus, the candidate is expected to know about Azure Virtual Networks, with a focus on Network Security Groups (NSGs), Application Security Groups (ASGs), User-Defined Routes (UDRs), Virtual Network peering, VPN gateways, Virtual WAN and ExpressRoute, including how to encrypt traffic over an ExpressRoute circuit. This section concludes with configuring firewall settings on PaaS resources and describing each of the network monitoring and diagnostic tools and their use cases.

Next up is plan and implement security for private access to Azure resources, where we look at service endpoints, Private Link and private endpoints. The module then looks at network integration for Azure App Service and Azure Functions before going on to network security configurations for an App Service Environment (ASE) and for Azure SQL Managed Instance.

The subject of the final networking module is plan and implement security for public access to Azure resources. We start with implementing Transport Layer Security (TLS) for applications, including Azure App Service and API Management, followed by Azure Firewall, Azure Firewall Manager and firewall policies. The remainder of this module comprises many of the Azure public-facing load balancers and supporting services, including Azure Application Gateway (with web application firewall (WAF)) and Azure Front Door (with Content Delivery Network (CDN)). This module, and networking as a whole, concludes by covering Azure DDoS Protection Standard.

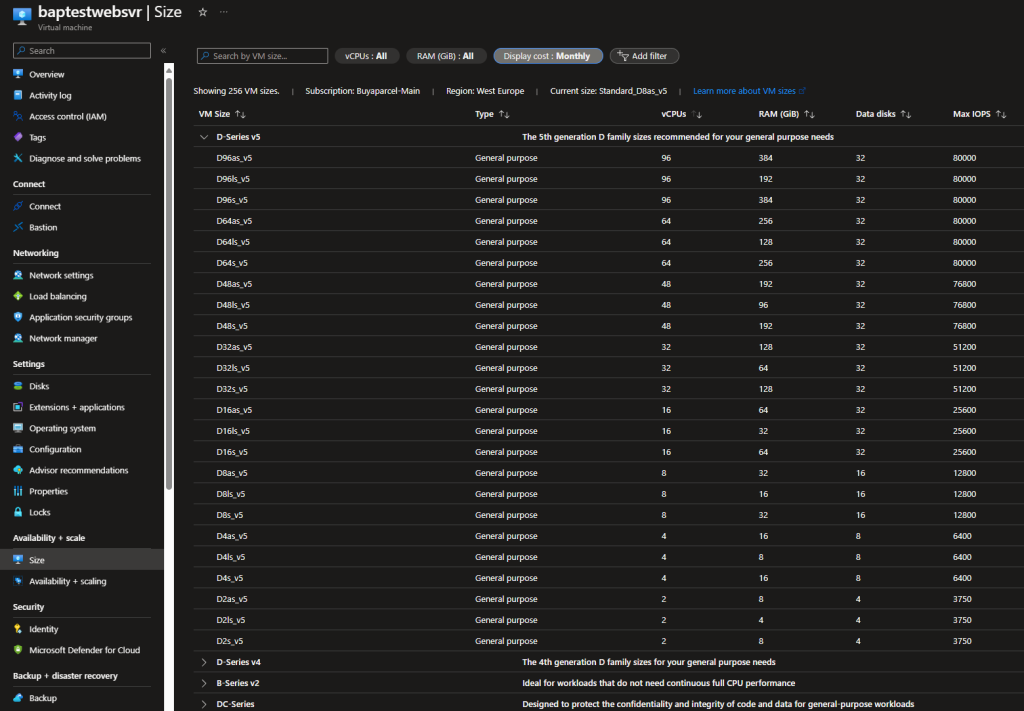

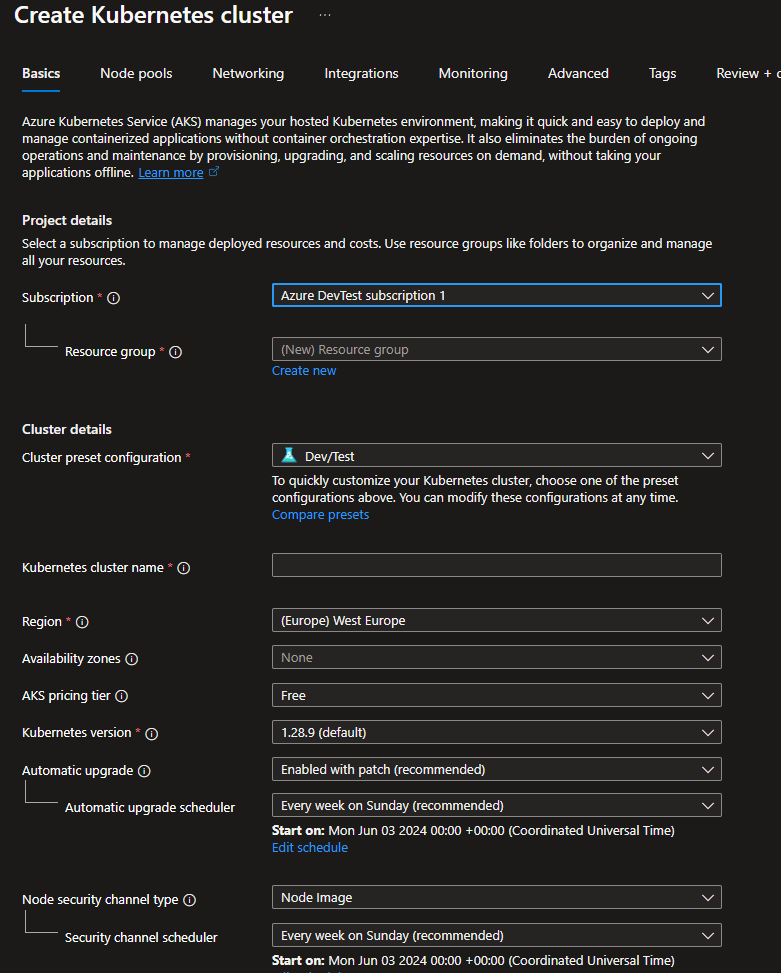

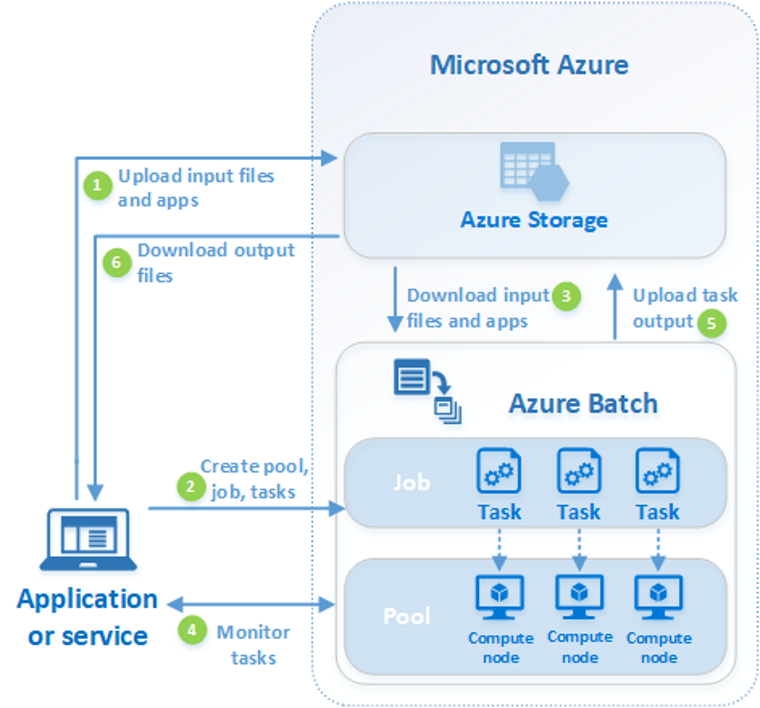

The penultimate topic is secure compute, storage, and databases, and it begins with a module entitled plan and implement advanced security for compute. This contains security best practice for many Azure compute services. It discusses Azure Bastion and just-in-time (JIT) virtual machine (VM) access and then moves on to network isolation for Azure Kubernetes Service (AKS). Then there is coverage of securing AKS, Azure Container Instances (ACI), Azure Container Apps (ACA) and Azure Container Registry (ACR). The module concludes with Azure Disk Encryption (ADE) and recommended security configurations for Azure API Management.

The next module is plan and implement security for storage. This section describes securing the storage account itself, including account keys. It then covers selecting and configuring an appropriate method for access to Azure files, blobs, tables and queues. Thereafter, the syllabus moves to methods for protecting against data security threats, including soft delete, backups, versioning and immutable storage, and then requires the candidate to have knowledge of bringing your own key (BYOK). The storage section concludes with enabling double encryption at the Azure Storage infrastructure level.

Plan and implement security for Azure SQL Database and Azure SQL Managed Instance is the module that covers authentication, monitoring and auditing, and some light coverage of Purview. It wraps up with some key SQL DB security features: dynamic data masking, transparent data encryption and Always Encrypted.

Manage security operations concludes the topic headers for the current Microsoft Azure Security Engineer Associate syllabus. To kick this off, the plan, implement, and manage governance for security section begins with what Azure governance is, then covers the core Azure services that provide guardrails against a weakened security posture. These include Azure Policy and initiatives, Azure Blueprints, Azure Landing Zones and the largest topic for this part, Azure Key Vault.

The second module is manage security posture by using Microsoft Defender for Cloud. This gives us a high-level overview of Defender for Cloud concepts such as secure score, adding industry and regulatory standards, custom initiatives, connecting hybrid cloud and multicloud environments and External Attack Surface Management (Defender EASM).

If you made it this far, you have done well: just two more modules to go, starting with configure and manage threat protection by using Microsoft Defender for Cloud. This is a long one because of all the components that make up Defender for Cloud. They include enabling workload protection services and configuring Defender for Servers and Defender for Azure SQL Database. A large part of this module pertains to setting up container security in Defender, before moving on to sections that focus on Microsoft Defender Vulnerability Management, Defender for Storage, and DevOps and GitHub security. It concludes with security alerts, automation and evaluating vulnerability scans from Microsoft Defender for Servers.

The final module is configure and manage security monitoring and automation solutions, which begins with monitoring security events by using Azure Monitor and concludes with the setup, alerting and automation of Microsoft Sentinel.

Exam hints and tips

It’s worth knowing that, unlike many other tests you can take from any number of vendors, Microsoft exams are not there to trip you up. There are no trick questions, so always go with the obvious answer, taking into account all the parameters in the question. That said, if you misread or skip part of a question, this could alter what you think the answer is.

If you are new to cybersecurity, or at least new to it in the context of Azure and the Microsoft ecosystem, consider studying for and sitting the SC-900, Microsoft Certified: Security, Compliance, and Identity Fundamentals exam to ease you into this path. It gives a solid overview, builds confidence and, if you take the exam and pass, you will have another certification to your name.

Microsoft exams test a candidate on services that are GA (generally available). They do not (should not) test on things that are in public or private preview. However, there have been a few exceptions to this rule where a product hasn’t technically left public preview but is a de facto solution now.

Be sure to check out more tips on the other certification posts. You can access them via the post archive.

Recommended resources

To start, please ensure you read through all the resources linked on the official Microsoft AZ-500 course.

You may be unsurprised to know that, for excellent video learning, I will point you to John Savill’s AZ-500 playlist, which includes one of his famous crams: in this case, the AZ-500 study cram.

Image Credit: Microsoft

Be sure to try some of the security-focused Microsoft Applied Skills. These lab-based assessments will give you practical skills to solve security challenges you may encounter in real-world scenarios.

Next steps

If you are working in cybersecurity or want to demonstrate a deeper knowledge of security matters that relate to the Microsoft stack, then consider the Microsoft Certified: Cybersecurity Architect Expert certification. This expert-level exam focuses on designing the security infrastructure that the engineers would roll out and maintain.

To obtain the Microsoft Certified: Cybersecurity Architect Expert cert, you need to pass the SC-100 exam plus one of the following:

Microsoft Certified: Azure Security Engineer Associate (exam AZ-500)

Microsoft Certified: Identity and Access Administrator Associate (exam SC-300)

Microsoft Certified: Security Operations Analyst Associate (exam SC-200)

You can take the SC-100 and AZ-500, for example, in either order, and once you have both, you will obtain the Microsoft Certified: Cybersecurity Architect Expert badge!
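If it helps to see the "SC-100 plus one of" rule written down, here is a minimal sketch in Python. The exam codes come from the list above; the function name and the idea of passing in a list of exam codes are purely illustrative assumptions, and the sketch models only the rule as described in this post, not Microsoft's actual eligibility checks.

```python
# Illustrative only: models the rule "SC-100 plus one of AZ-500, SC-300, SC-200".
# The function name and input format are hypothetical, not a Microsoft API.

REQUIRED_EXAM = "SC-100"
QUALIFYING_EXAMS = {"AZ-500", "SC-300", "SC-200"}


def earns_cybersecurity_architect_expert(passed_exams):
    """Return True if the passed exams satisfy the rule described above."""
    passed = set(passed_exams)
    has_required = REQUIRED_EXAM in passed
    has_qualifying = bool(passed & QUALIFYING_EXAMS)
    return has_required and has_qualifying


if __name__ == "__main__":
    # The order of the exams does not matter, matching the note above.
    print(earns_cybersecurity_architect_expert(["AZ-500", "SC-100"]))
    print(earns_cybersecurity_architect_expert(["SC-100"]))
```

Because the check uses sets, the order in which the exams were taken makes no difference, which mirrors the point that SC-100 and AZ-500 can be sat in either order.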