Microsoft describes this certification on its certification page as follows: “This certification validates your foundational knowledge of cloud concepts in general and Azure in particular. As a candidate for this certification, you can describe Azure architectural components and Azure services, such as compute, networking, and storage, as well as features and tools to secure, govern, and administer Azure.”

An excellent introduction. Let’s look at this certification in more detail.

Who is this certification for?

Rather than listing who should take this certification, it would be easier to list who it isn’t for. It really is for practically anyone. Here are some examples:

The most obvious is someone starting out in the cloud, either as a speciality or as part of a wider IT learning strategy. Whilst this is a Microsoft-specific certification with a focus on Azure, a large part of it covers general cloud concepts such as Capex vs Opex, IaaS, PaaS, SaaS, the shared responsibility model and so on.

Another ideal candidate for the Azure Fundamentals certification would be someone who is migrating from another cloud provider or is looking to become a multi-cloud professional. This individual will already know the general cloud terms and can skip those sections. Even so, the Azure Fundamentals learning path covers a broad spectrum of Azure products and services and is the quickest route to an overview before deciding which areas to dig into next.

An individual who works with Azure only at a high level would find the Azure Fundamentals learning path useful for context and an overall understanding of the technology. Consider a project manager or an executive who leads teams delivering solutions for the company. They may not deploy services in the cloud themselves, but for discussions about choosing an approach to a task or getting project updates from their team, it’s good to be familiar with the terminology and capabilities used in the cloud. Whether it’s containers vs IaaS or virtual disks vs blob storage, knowing the differences will help the organisation become more agile.

Exam requirements

To obtain the Microsoft Azure Fundamentals certification, you must pass a single exam, AZ-900: Microsoft Azure Fundamentals. There are no prerequisite certifications, so this is a great standalone certification to start your Azure journey. Microsoft Fundamentals certifications do not expire, so unlike associate, specialty and expert level certifications, they don’t require a yearly renewal.

Topics covered

The learning path and exam objectives are updated often, but at the time of writing, Microsoft has grouped the “stuff to know” into three main areas.

Describe cloud concepts, as the name suggests, covers general cloud terminology that is not specific to any particular cloud vendor. It walks through the benefits of the cloud over on-premises solutions (TL;DR: most organisations’ own facilities will not be able to achieve anything like the security, reliability, manageability and scalability that a public cloud can).

After studying this section, you will understand the foundations of cloud computing. These include the shared responsibility model, consumption-based pricing, and how the cloud can bring reliability, elasticity and security to workloads. The Describe cloud concepts section concludes with the pros and cons of the cloud service types: Infrastructure as a Service (IaaS), Platform as a Service (PaaS) and Software as a Service (SaaS). There are others, such as FaaS, DaaS and CaaS, but only the broad ones are covered in this learning path.

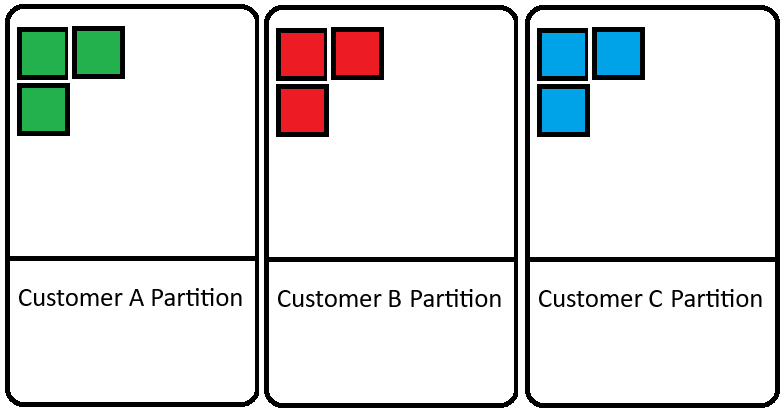

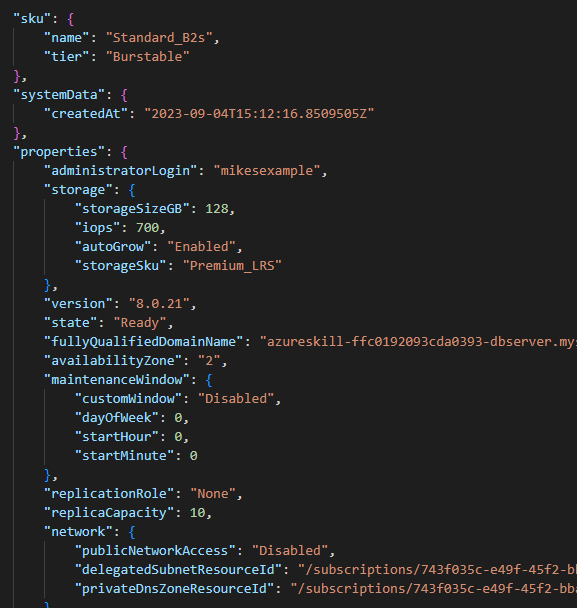

The next section is Describe Azure architecture and services. For me, this is the most enjoyable part of the learning experience because we are now looking at actual products and solutions for hosting your workloads on the Azure cloud. Beginning with the core architectural components, you will learn about user accounts, management infrastructure and how a resource is created.

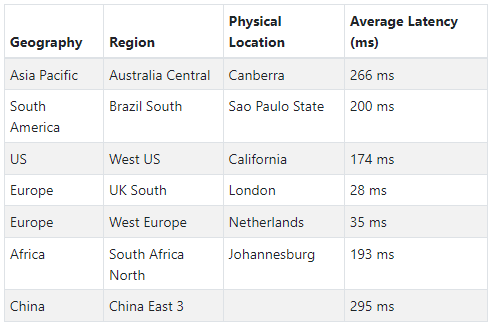

Then we look at the compute and networking services that are at the heart of just about any Azure subscription. These range from virtual machines (VMs), which are often used for lift-and-shift migrations from on-premises to the cloud, through to more cloud-native solutions such as containers and function apps. Virtual networks, DNS and connectivity options are covered to show how software-defined networking is woven into the cloud architecture. Azure Storage is covered next. It starts with a core component, the storage account, and then looks at the redundancy options, which offer varying levels of resilience and cost depending on your requirements. The storage topic wraps up with data movement and migration services.

The architecture and services section concludes with identity, access and security. The most important subject here, in terms of understanding the cloud vs the traditional on-premises model, is the zero-trust model. With devices and users potentially working from many locations around the globe, security is no longer centred on the office firewall at the network edge but is built around identity. This part covers Azure-specific services related to security. These include Azure Active Directory (now called Microsoft Entra ID), external identity management, conditional access and role-based access control (RBAC), which sets the permissions for users, groups, apps or service principals.

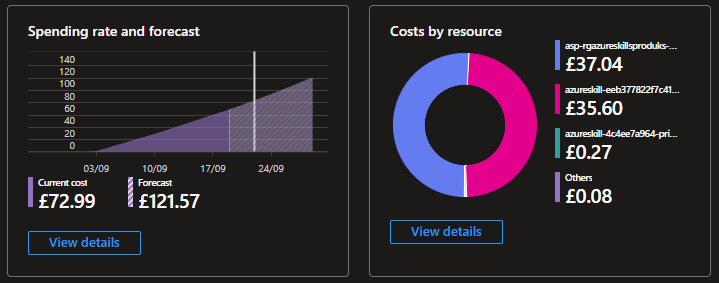

Describe Azure management and governance is the third and final section of the AZ-900 syllabus, and it covers a lot of ground. A large part is dedicated to getting on top of costs, which is particularly important in an Opex / consumption model. As well as learning about the factors that affect cost, such as resource type and geography, you will look at tools built specifically for estimating costs: the Pricing Calculator and the Total Cost of Ownership (TCO) Calculator.

When looking at governance and compliance features, the focus is on Azure Policy and resource locks for creating guardrails in your Azure environment. For data, Microsoft Purview is used for finding and classifying data across multiple sources such as M365, Azure storage or another cloud provider. The material continues with the Service Trust Portal. This resource covers Azure’s compliance with many standards and regulations, such as ISO, GDPR and PCI.

The deployment topic covers the ways to interact with Azure, namely the Azure portal, Azure PowerShell and the Azure CLI. Azure Arc is for managing on-premises resources and other cloud providers’ resources from within Azure. ARM templates and Bicep are Infrastructure as Code (IaC) solutions. They offer repeatable, predictable results and reduce the chance of human error compared with deploying resources manually. The last module in the Azure management and governance learning focuses on monitoring tools, namely Azure Advisor, Azure Monitor and Azure Service Health.

As you can see, the syllabus covers a very broad spectrum of topics, but don’t be discouraged. Exam questions relate to high-level matters. For example, which service would you use to scan your data and classify any personally identifiable information? What would you use to ensure resources are only deployed in the East US region? You will not be asked how to write an ARM template, for example.

Exam hints and tips

The first tip is: don’t be fooled by the word Fundamentals. It should be a relatively straightforward exam to pass compared with associate, specialty or expert level certifications. Even so, if you haven’t studied all the subjects covered in the learning path, you may not pass. The exam is broad, so you need to keep a fair amount in your head.

Microsoft have now made role-based exams “open book”, meaning you can access Microsoft Learn content during the exam, but this does not apply to Fundamentals exams. For AZ-900 you can’t look anything up and will need to rely solely on the knowledge you have gained.

A great tip from Tim Warner for Microsoft exams in general is to always answer every question. Even if it’s a guess, you may get it right, whereas you will definitely get it wrong if you don’t answer. There are no negative marks for a wrong answer. Some multi-part questions, such as “pick 3”, will also give you some points for a partially correct answer.

Exams can be taken at a test centre or at a place of your choosing on a webcam-enabled computer. If you have never taken an exam before, do try the Microsoft exam sandbox. It gives you an interactive experience of what to expect and the formats in which questions may be presented. You have enough to think about in recalling what you have learned, so you want to be as comfortable as possible with the nuts and bolts of the exam.

Read each question carefully. Another general tip is not to lose points through silly mistakes. An obvious one is reading “which solution would not” as “which solution would”.

Recommended resources

The first resource I will always recommend is Microsoft’s own content for the AZ-900 exam on Microsoft Learn. It is organised as a collection that brings together a number of learning paths, with modules in each path that specifically follow the exam syllabus. The content is very good, and there’s great reassurance in working through everything Microsoft themselves provide.

Next up is John Savill’s AZ-900 Azure Fundamentals Certification Course. John works for Microsoft, and his John Savill’s Technical Training YouTube content is legendary. He whiteboards the subjects and adds practical demonstrations. The course is organised as a playlist and currently contains an amazing 65 videos (9 hours’ worth). I have paid for a lot of courses in my time, and John’s content is as good as, and sometimes better than, the courses from training providers. What’s more, John does this out of a passion for helping others. He doesn’t monetise the videos, despite how good they are. If you are short on time and have already worked through Microsoft’s content, I think John’s AZ-900 study cram on its own would likely get you over the line. However, if you have the time, I recommend watching every video in the playlist. Much of the material will give you a solid base for continuing your learning journey after you pass the AZ-900 exam.

I took and passed my AZ-900 exam in December 2020. It was the first Microsoft certification I did, and at the time John Savill’s AZ-900 videos did not exist. One of the best resources I used was Adam Marczak’s Microsoft Azure Fundamentals (AZ-900) Full Course on YouTube, part of his Azure for Everyone channel. Adam is a Microsoft MVP and a great communicator. The graphics he uses in his videos are brilliant, and watching them got me over the line. Some of the videos are several years old now. However, the regular comments thanking him for getting viewers through the exam show that his content is still relevant. Azure does develop at a fast pace, but the concepts of cloud computing and the popular Azure services are still the same at a high level.

Next steps

Once you have passed your AZ-900 exam and have your Microsoft Certified: Azure Fundamentals badge, what’s next?

It all depends on the individual and their goals. If you only needed an overview of the cloud and Azure specifically, you can walk away proud that you can demonstrate your knowledge at this level. If you want to progress further, your choices will depend largely on your job role, the job you are working towards or simply where your interests lie.

However, if there is one next step that ticks many boxes, the most popular choice must be Microsoft Certified: Azure Administrator Associate.

There are two reasons for this. Firstly, after learning the concepts in Fundamentals, you will no doubt want to try Azure and perhaps start to use it in your organisation. Working through the Azure Administrator certification path should give you the skills to deploy, secure and monitor many of the popular services in Azure.

Secondly, it opens a path to two expert level certifications: Microsoft Certified: Azure Solutions Architect Expert and Microsoft Certified: DevOps Engineer Expert*. Both certifications require a second exam to get the expert badge (AZ-305 and AZ-400 respectively). Still, being able to work towards two expert certifications from the AZ-104: Microsoft Azure Administrator exam has to be a great move for your personal development.

* Alternatively, you can obtain the DevOps Engineer Expert certification by passing AZ-204: Developing Solutions for Microsoft Azure instead of AZ-104, alongside AZ-400.