A core feature of any public cloud provider is compute, from heavyweight offerings like virtual machines through to serverless tech in the form of functions. Azure is no exception. In fact, the list of compute services in Azure is extensive. Here we will explore those services, describing each one, giving reasons why you might choose it and outlining some use cases.

Virtual Machines

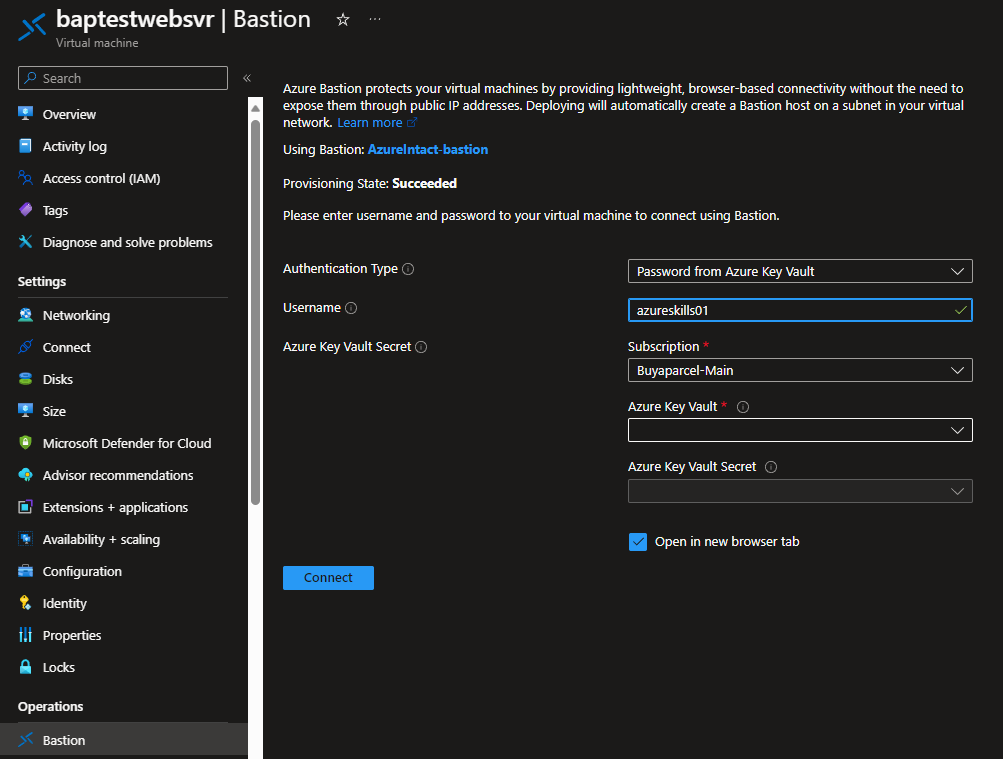

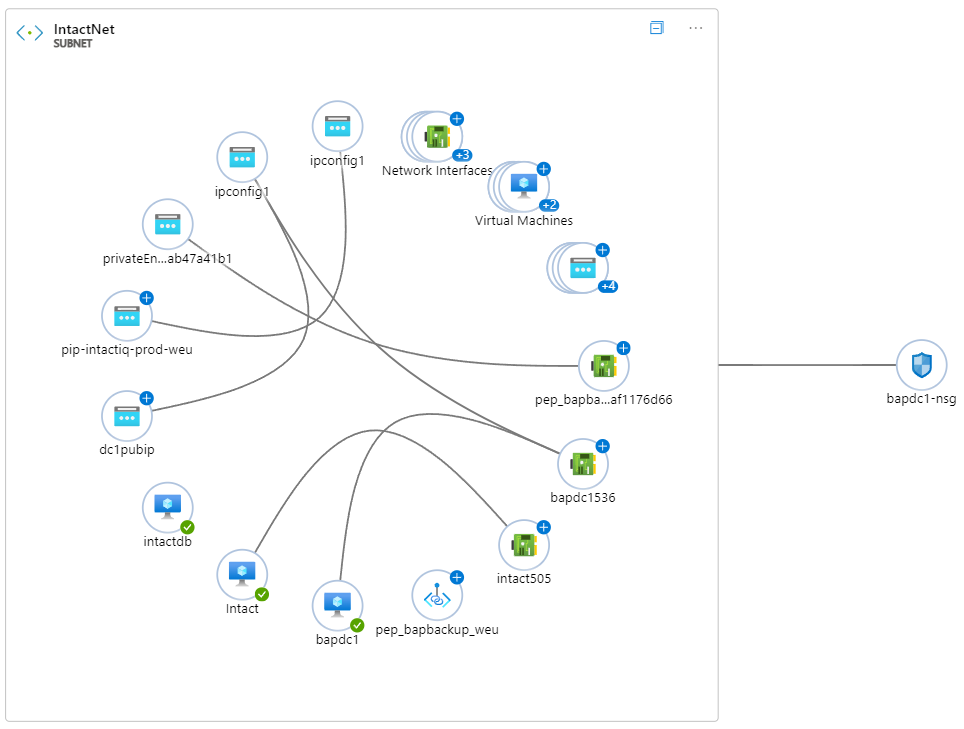

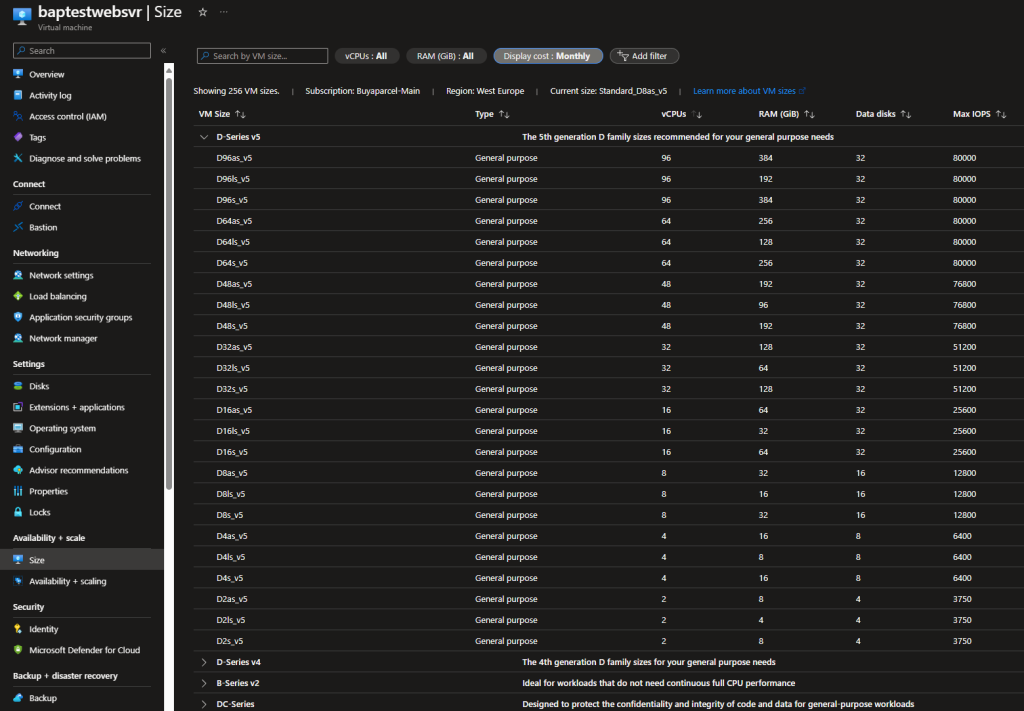

A great place to start is Virtual Machines. VMs have been the staple of on-prem and co-lo solutions for many years, so it was only natural that public clouds, Azure included, would offer them. Falling under the category of infrastructure as a service (IaaS), a VM gives the administrator access to the guest OS (not the host OS), meaning fine-grained environment settings can be made. However, with power comes responsibility: the administrator is responsible for updating the OS and ensuring security settings are adequate.

You can run a variety of different Linux or Windows VMs from the Azure marketplace or using your own custom images. For those who have larger workloads, need to build in resiliency or have varying levels of traffic, Azure offers Virtual Machine Scale Sets (VMSS) which can be configured to create more VM instances based on metrics such as CPU use.
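To make the scaling behaviour concrete, here is a minimal sketch of how a CPU-based rule might decide the instance count. It is plain Python rather than Azure's own autoscale schema: the `AutoscaleRule` class, its field names and the threshold values are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class AutoscaleRule:
    """Hypothetical CPU-based rule; field names are illustrative, not Azure's schema."""
    scale_out_above: float = 70.0  # average CPU % that triggers adding an instance
    scale_in_below: float = 30.0   # average CPU % that triggers removing an instance
    min_instances: int = 2
    max_instances: int = 10


def next_instance_count(current: int, avg_cpu: float, rule: AutoscaleRule) -> int:
    """Return the instance count after applying the rule once."""
    if avg_cpu > rule.scale_out_above:
        desired = current + 1
    elif avg_cpu < rule.scale_in_below:
        desired = current - 1
    else:
        desired = current
    # Never leave the configured bounds.
    return max(rule.min_instances, min(rule.max_instances, desired))


rule = AutoscaleRule()
count = 2
for cpu in [85.0, 90.0, 50.0, 20.0, 10.0, 10.0]:
    count = next_instance_count(count, cpu, rule)
    print(f"avg CPU {cpu:>5.1f}% -> {count} instance(s)")
```

The gap between the two thresholds is deliberate: without it, a load hovering around a single threshold would cause instances to be added and removed repeatedly.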

Choosing VMs as your compute solution could be driven by a desire to make the quickest migration from on-prem to cloud (known as lift and shift). It could also be because a legacy application would be complex to move into a more cloud-native solution.

Azure App Service

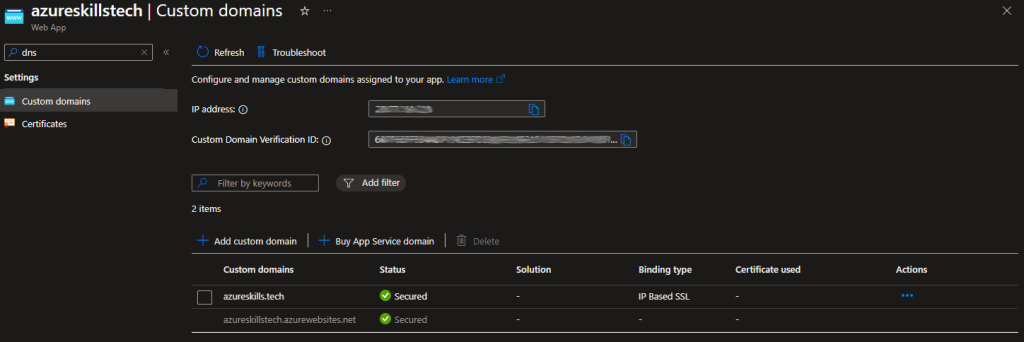

Azure App Service allows you to host HTTP(S)-based services (websites and APIs) without the complexity of maintaining VMs or having to run Docker images. This is a platform as a service (PaaS) offering that supports multiple programming languages including PHP, Java, Node.js and .NET. With this service, you can run on Windows or Linux, but the OS is abstracted away and all you are left with is an environment to host and run your applications. There are useful features like continuous integration and deployment with Azure DevOps, GitHub, Bitbucket, Docker Hub or Azure Container Registry.

Organisations would use Azure App Service to deliver website front ends, mobile back ends or RESTful APIs for integration with other systems. It supports multiple programming languages, integrates with Visual Studio and VS Code, and works in DevOps pipelines, all of which makes Azure App Service popular and familiar for developers. Patching is done automatically and you can scale your application automatically to meet changing demands.

Azure Functions

Next up, we have Azure Functions. Functions are a serverless offering in which code runs when a trigger such as an HTTP request, queue message, timer or event initiates it. This is perfect for short-lived, sporadic workloads because, on the Consumption plan, you are only charged while your code executes in response to a trigger and not while the function is idle.

Azure Functions supports multiple programming languages including Java, JavaScript, Python and C#. Functions are generally stateless, meaning they hold no information from one trigger to the next. However, there are Durable Functions, which can retain information for future processing. Durable Functions is an extension of Azure Functions and has practical uses in various application patterns such as function chaining and fan-out/fan-in.
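The two patterns named above can be sketched in plain Python. This is not the Durable Functions API: the three small functions (`clean`, `tokenize`, `count_words`) are hypothetical stand-ins for individual functions, and a thread pool stands in for the platform running them in parallel.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical "activity" functions; each stands in for one small function.
def clean(text: str) -> str:
    return text.strip().lower()


def tokenize(text: str) -> list[str]:
    return text.split()


def count_words(words: list[str]) -> int:
    return len(words)


def chain(text: str) -> int:
    """Function chaining: each step consumes the previous step's output."""
    return count_words(tokenize(clean(text)))


def fan_out_fan_in(documents: list[str]) -> int:
    """Fan out: process every document in parallel. Fan in: aggregate the results."""
    with ThreadPoolExecutor(max_workers=4) as pool:
        counts = list(pool.map(chain, documents))
    return sum(counts)


docs = ["  Hello Azure  ", "Functions run on triggers", "Fan out then fan in"]
print(chain(docs[0]))        # 2
print(fan_out_fan_in(docs))  # 11
```

In a real orchestration the intermediate results would need to survive restarts between steps, which is the state that an ordinary stateless function cannot hold on its own.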

Functions generally play well for short-lived tasks. There is no need to stand up other compute services, only to have them sit idle for the majority of the time. Use cases include orchestrating some automation in a solution or initiating some data processing when new data becomes available. Generally, they form part of a larger, loosely coupled architecture, where services are independent, allowing for modular development of a single part without affecting other parts. This enables resilience in the design: by incorporating message queues, for example, the app as a whole can continue to run if one part of the system becomes temporarily unavailable.
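As a rough illustration of that decoupling, the sketch below uses Python's in-process `queue.Queue` as a stand-in for a message queue service. The `accept_order` and `drain` helpers and the order IDs are hypothetical; no Azure SDK is involved.

```python
import queue

# Stand-in for a message queue service sitting between two parts of the system.
orders = queue.Queue()


def accept_order(order_id: str) -> None:
    """Front end: enqueue and return immediately, whether or not a worker is up."""
    orders.put(order_id)


def drain(handler) -> int:
    """Worker: process everything waiting in the queue; returns how many were handled."""
    handled = 0
    while True:
        try:
            order_id = orders.get_nowait()
        except queue.Empty:
            return handled
        handler(order_id)
        handled += 1


# The worker is "down" while these arrive, yet the front end keeps accepting work.
for order_id in ["A1", "A2", "A3"]:
    accept_order(order_id)

processed = []
# The worker comes back and catches up on the backlog in arrival order.
print(drain(processed.append), processed)  # 3 ['A1', 'A2', 'A3']
```

The front end never calls the worker directly, so an outage on the worker side shows up as a growing backlog rather than as failed requests.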

Azure Kubernetes Service

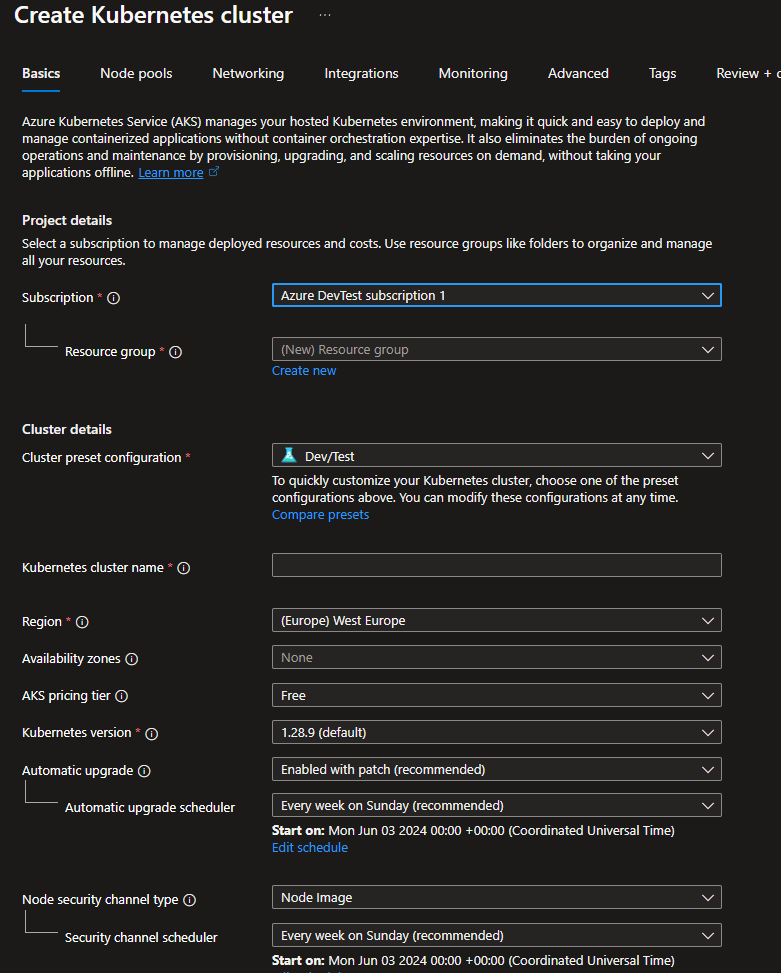

Kubernetes is a container orchestration system. Originally designed by Google, it is now an open source solution that has become the de facto way to deploy, manage and scale containerised applications in the form of Kubernetes clusters. Kubernetes (also known as K8s) is an ideal solution for running apps using the microservices model.

Azure Kubernetes Service (AKS) is Azure’s offering of Kubernetes in the cloud. AKS takes much of the control plane management off your hands and allows you to focus on deploying your application quickly. Being an Azure service, it plays nicely with other areas of Azure such as identity, networking, monitoring and more.

Almost any type of application could be run in containers, and when there’s a need to manage and monitor multiple containers for scale, security and load balancing, AKS provides these advantages. Essentially it is provisioning containerised microservices in a more sophisticated way than deploying and managing containers individually.

Azure Container Apps

For other container workloads that do not require all the features of Kubernetes, there is Azure Container Apps. It is a fully auto-scaling solution, including scaling down to zero instances, which makes it a serverless offering where required. You can auto scale based on HTTP traffic, CPU or memory load, or events, and you can also run on-demand, scheduled or event-driven jobs.
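A simplified way to picture HTTP-based scaling with scale to zero is a replica count derived from current load and clamped to configured bounds. The function below is only a sketch of that arithmetic: the parameter names and default values are assumptions, and the real scaling engine applies additional behaviour (such as polling intervals and cool-down periods) that is not modelled here.

```python
import math


def desired_replicas(concurrent_requests: int,
                     target_per_replica: int = 10,
                     min_replicas: int = 0,
                     max_replicas: int = 5) -> int:
    """Replicas needed so each handles at most `target_per_replica` requests."""
    needed = math.ceil(concurrent_requests / target_per_replica)
    # Clamp to the configured bounds; a minimum of 0 allows scale to zero.
    return max(min_replicas, min(max_replicas, needed))


for load in [0, 1, 25, 200]:
    print(load, "->", desired_replicas(load))
# 0 -> 0
# 1 -> 1
# 25 -> 3
# 200 -> 5
```

Setting `min_replicas` to 1 instead of 0 would trade the cost saving of scale to zero for avoiding a cold start on the first request.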

Under the hood, Azure Container Apps is powered by AKS but it is simplified so that deployment and management are a lot easier. When deploying your container, Azure Container Apps can create the TLS certificate, meaning you can use your application securely from the outset with no additional configuration. Dapr (Distributed Application Runtime) is also included in the service, making it easier to manage application state, secrets, inter-service invocation and more. With Azure Container Apps, you can deploy into your own virtual network, giving you many options regarding routing, DNS and security.

Azure Container Apps is great when working on a multi-tiered project, such as a web front-end, a back-end, and a database tier in a microservices architecture.

Azure Container Instances

Another service for containers in Azure is Azure Container Instances (ACI). This service is much more basic than Azure Kubernetes Service or Azure Container Apps. Creating an instance is simple and lets you run Docker containers in a managed, serverless cloud environment without the need to set up VMs, clusters, or orchestrators.

If using Linux containers, you can create a container group, which allows multiple containers to sit on the same host machine. Containers in a group share a lifecycle, resources, local network, and storage volumes. This is similar to the concept of a pod in Kubernetes.

Because ACI allows containers to be run in isolation, this suits batch or automation/middleware workloads which are not tightly coupled to other parts of the system. The removal of orchestration features makes it easy for anyone to quickly use containers in their projects. Ideal use cases could be running app build tasks or for doing some data transformation work.

Azure Batch

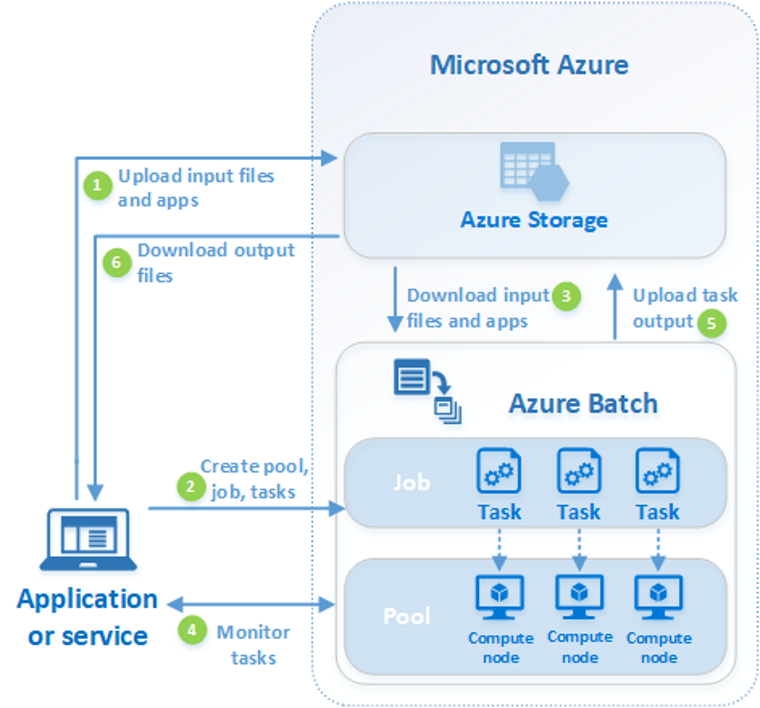

If you have a workload that needs a lot of compute for a limited amount of time, Azure Batch is a great service that is simple to use and understand. You create an Azure Batch account and create one or more pools of VMs. The VM selection is vast and includes GPU-backed SKUs, ideal for rendering and AI tasks.

From there, you create a job in which one or more tasks are created. When the job is run, the VMs work in parallel to accelerate the processing of the task(s). There are manual and auto scaling features to ensure you have sufficient compute power to complete the job in the required timeframe. Azure Batch supports the use of spot instances, which are excess capacity in Azure datacentres sold at a fraction of the cost, with the proviso that Azure can take them back at short notice when it needs the capacity. This makes them a good fit for VMs you only need to spin up while a job is running.
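The relationship between a pool, a job and its tasks can be pictured with a small simulation. This is a sketch in plain Python, not the Azure Batch SDK: `run_job`, the task names and the round-based scheduling are all assumptions, and the requeue step illustrates the idea that work interrupted on a reclaimed spot node is retried rather than lost.

```python
from collections import deque


def run_job(tasks: list[str], nodes: int, preempted_on_first_try: set[str]) -> list[str]:
    """Simulate a pool working through a job in rounds of up to `nodes` tasks.

    Tasks named in `preempted_on_first_try` lose their node once and are requeued.
    Returns the order in which tasks completed.
    """
    pending = deque(tasks)
    already_preempted: set[str] = set()
    completed: list[str] = []
    while pending:
        # Each round, every node picks up one pending task and they run side by side.
        batch = [pending.popleft() for _ in range(min(nodes, len(pending)))]
        for task in batch:
            if task in preempted_on_first_try and task not in already_preempted:
                already_preempted.add(task)
                pending.append(task)  # requeue: the work is retried, not lost
            else:
                completed.append(task)
    return completed


frames = [f"frame-{i}" for i in range(1, 6)]
print(run_job(frames, nodes=2, preempted_on_first_try={"frame-2"}))
# ['frame-1', 'frame-3', 'frame-4', 'frame-5', 'frame-2']
```

With two nodes the five tasks finish in three rounds; adding nodes reduces the number of rounds, which is the effect scaling the pool is meant to achieve.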

Use cases for Azure Batch would include data processing on huge datasets, rendering workloads, and AI/ML or scientific work that requires large-scale parallel and high-performance computing (HPC), which would ordinarily require organisations to have mainframe computing on premises.

Azure Service Fabric

If you are building cloud native projects that go beyond just containers, then Azure Service Fabric is a good contender to consider. It is a distributed systems platform that aims to simplify the development, deployment and ongoing management of scalable and reliable applications.

Service Fabric supports stateless and stateful microservices, so there is potential to run containerised stateful services in any language. It powers several Microsoft services including Azure SQL Database, Azure Cosmos DB and Dynamics 365. As Microsoft’s container orchestrator, Service Fabric can deploy and manage microservices across a cluster of machines. It can do this rapidly, allowing for high-density rollout of thousands of applications or containers per VM.

You are able to deploy Service Fabric clusters on Windows Server or Linux on Azure and other public clouds. The development environment in the Service Fabric SDK mirrors the production environment. Service Fabric integrates with popular CI/CD tools like Azure Pipelines, Jenkins and Octopus Deploy. Application lifecycle management is supported to work through the various stages of development, deployment, monitoring, management and decommissioning.

Azure Spring Apps

The Spring framework is a way of building Java applications in a portable environment, with security and connectivity handled by the framework so development and deployment are quicker and simpler. With Spring, you have a solid foundation for your Java applications, providing essential building blocks like dependency injection, aspect-oriented programming (AOP), and transaction management.

Azure Spring Apps provides a fully managed service, allowing you to focus on your app, whilst Azure handles the underlying infrastructure. It allows you to build apps of all types including web apps, microservices, event-driven, serverless or batch. Azure Spring Apps allows your apps to adapt to changing user patterns, with auto and manual scaling of instances.

Azure Spring Apps supports both Java Spring Boot and ASP.NET Core Steeltoe apps. Being built on Azure, you can integrate easily with other Azure services such as databases, storage and monitoring. There is an Enterprise offering which supports VMware Tanzu components, backed by an SLA.

Azure Red Hat OpenShift

Red Hat OpenShift is an enterprise-ready Kubernetes offering, enhancing Kubernetes clusters in a platform that provides tools to help develop, deploy and manage your application.

Built on Red Hat Enterprise Linux and combined with the security and stability of Azure, it offers enhancements in areas like source control integration, networking features, security and a rich set of APIs, and it has hybrid cloud at the heart of its design.

Red Hat OpenShift is versatile and can support a number of use cases including web services, APIs, edge computing, data-intensive apps, and legacy application modernisation. Being built for enterprise use, it is used by a large number of Fortune 500 companies, a testament to the value proposition it brings.

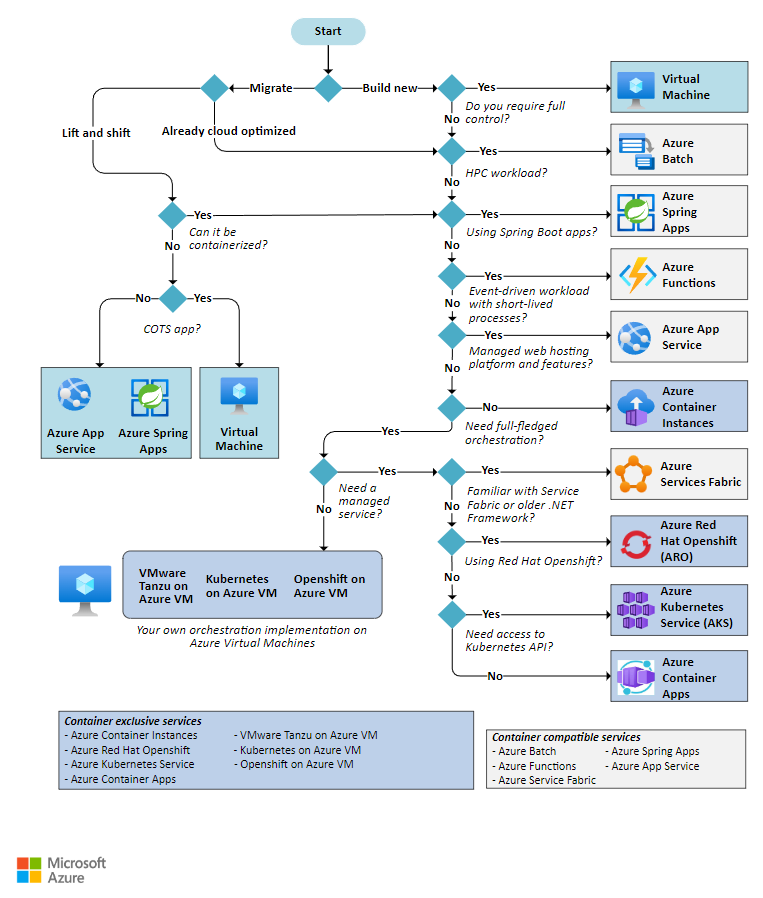

Conclusion

As we can see, there is a whole array of compute offerings within Azure. Deciding on which to use will depend on use case, cost and how the application will interact with other services or the outside world.

Sometimes a particular compute service may be ideal for dev/test, but a different service may suit the app better once it is in production. In other cases, multiple compute types may be used for larger, more complex projects.

Take time to consider which services would satisfy your requirements, then weigh up the merits and challenges of each of them before making a decision.