What is Cosmos DB?

Microsoft describes their product: Azure Cosmos DB is a fully managed NoSQL and relational database for modern app development. Azure Cosmos DB offers single-digit millisecond response times, automatic and instant scalability, along with guaranteed speed at any scale. Business continuity is assured with SLA-backed availability and enterprise-grade security.

That is a good start but let us look at what that means in more detail. Since the explosion of the cloud and cheap storage, globally distributed, petabyte scale projects are no longer the preserve of a handful of conglomerates. Now businesses of all sizes can leverage cloud services that can open new markets and properly serve local populations in a predictable and responsive way.

For Azure, we think about read access geo-zone-redundant storage (RA-GZRS) or Azure Content Delivery Network (CDN), for front end content delivery. We think of services like Azure Front Door or Traffic Manager for load balancing. For the application, we are looking at multi region Web app deployments or Azure Kubernetes Service (AKS), deployed to more than one region. And for databases? You got it – Cosmos DB.

Cosmos DB is a cloud native Database solution which addresses many of the issues traditional database engines can suffer from when used in a cloud environment.

What problems does Cosmos DB solve?

Let us look at the issues that Cosmos DB can address, and why it is preferable to other databases in a variety of situations.

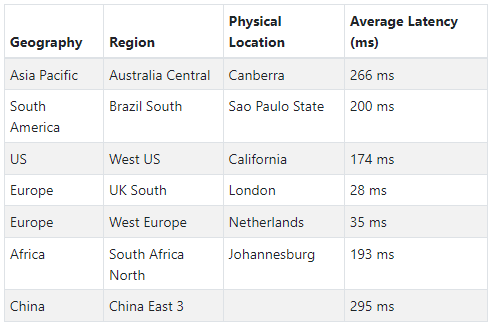

As mentioned previously, one major advantage is the globally distributed architecture that can be achieved with Cosmos DB. By putting your data as close to your users as possible, you can achieve performance that is significantly better than traditional DB solutions, which, whilst able to cluster, may suffer from issues around deadlocks and latency.

By delivering single digit millisecond reads and writes and with a robust conflict resolution feature there is real world advantages to your application responsiveness and your users will achieve predicable outcomes, even if a database is having many writes within fractions of a second of each other from multiple locations around the world.

Different use cases and volumes of data, such as order transaction or sensor data, will require differencing amounts of resources. To ensure your application is running as cost effectively as possible in the cloud whilst remaining consistently responsive is a challenge for any cloud architect. Fortunately, Cosmos DB’s ability to rapidly scale up or down resources without downtime is a baked in feature which harnesses the elasticity element of a cloud native product.

Storing data using Cosmos DB is very flexible. As well as being a schema-less design, allowing for much more flexibility at the application level, there are different models to choose from including document, key-value, graph and columnar.

Inserting, querying, and interacting with the data is achieved by default using the API for NoSQL. However, if you are migrating an application to Cosmos DB or have developers with an existing skillset, you may choose to use the API for MongoDB, PostgreSQL, Apache Casandra, Gremlin or Table.

What workloads lend themselves to using Cosmos DB?

Because of its ability to ingest a high number of records very quickly, working with streaming workloads such as IoT device’s sensor readings or financial data input. Cosmos DB is fully indexed as standard so real time analytics can occur – the results surfaced to a user or app or as part of a wider data pipeline solution can be available rapidly.

Gaming is another area which can benefit from Cosmos DB’s multi-region, fast reads and writes capabilities. With gaming, especially MMORPGs, things need to happen quickly and so anything that needs to persist, or gameplay be updated through a database, Cosmos DB is a perfect choice.

Cloud native web applications written or rearchitected for Cosmos DB can take advantage of the scalability and security that comes as standard.

How well does Cosmos DB integrate with other Azure products?

Any Azure hosted application could be developed to work with Cosmos DB. Microsoft provides SDKs for multiple programming languages including .NET/.NET core, Java, Node.js, Python and Go. Or you can develop in a language of your choosing by building for the Cosmos DB REST API. It does not matter if you are using VMs, Azure Container Instances (ACI), Azure Kubernetes Service (AKS), or App Service. Whatever your requirements in terms of hosting and language, Cosmos DB can fit.

But what about plug-in connectivity to other Azure Features? Well, Microsoft identifies where Azure services will be commonly connected and as such makes provision to make some services work with a Cosmos DB account with a few simple parameters set. Let us look at some of those.

Azure Function Apps are a great serverless technology that can run code based on triggers. It is very cost effective and allows for a lot of flexibility where some process does not require always on compute. You can create bindings in Azure Functions that allow Cosmos DB to store output from the resulting function or provision an input binding for a function app to retrieve a document to do some processing with or instruct another app based on the document contents.

Azure Synapse Analytics can ingest data from Cosmos DB and perform transformations on it and store in a data lake or external table. Data can also be analysed in real time using Synapse Analytics or Azure Stream Analytics, which is a serverless tool which can process the data at scale and with ML capabilities.

Use indexers in Azure Cognitive Search to take imported data from Cosmos DB and provide semantic search capabilities.

What are some of the coolest features of Cosmos DB?

We have now gone through what I hope you will agree are cool features including the ability to work with multiple APIs such as Gremlin and Casandra and use multiple data models such as document, graph, and key values.

We have also noted that indexing is performed automatically for fast query performance out of the box, and that the platform is designed to allow the data to be globally distributed to improve reliability and reduce latency. But there is more!

Cosmos DB comes with enterprise level security in mind. Security is not only cool, but essential. Authorisation and authentication can be managed by Microsoft’s Entra ID, and network access can be restricted to specific Azure virtual network, or you could configure Private Link.

What about the change feed? It is mentioned a lot in Cosmos DB. What is it and what can applications do with it?

The change feed is a record of changes made in the container. It supports most of the Cosmos DB APIs, namely NoSQL, Cassandra, MongoDB, and Gremlin. It is used for tools such as Functions to create triggers based on updates to data including when a record has been deleted.

The change feed can be used to perform incremental Extract, Transform, Load (ETL) processing by informing the sink’s processing tool of new or updated data being available. It could also be used for real time stream processing or could help with actions to trigger IoT device updates based on some condition that can occur that the change feed projects.

Why are there consistency levels in Cosmos DB and what are the different levels?

When planning an app that can be used over a wide geographic area, potentially the globe or (even into space), stakeholders need to consider the trade-offs between all users seeing the exact same data, which can take some time, verses just getting the data loaded with as little latency as possible. Even with fast CPUs, storage, routers and efficient code, the laws of physics play a part and so the consistency level should be carefully considered when looking at a multi-region deployment.

The 5 levels from most consistent to least are Strong, Bounded staleness, Session, Consistent prefix and Eventual.

Strong will not make the data readable until the data is committed to all regions.

Bounded staleness works for two or more regions and works best for a single region write. The developer sets the maximum number of versions or time intervals for a region and if the configured staleness value is exceeded, writes for the affected partition are throttled until staleness is within the configured upper bound.

The default consistency is Session which, by way of session token ensures the user or application always reads its own writes. If a client did not write that data, the data is read with eventual consistency.

Consistent prefix ensures that updates that are made as a single document (I.e., they share the same prefix) are always visible together. For single document updates, they will see eventual consistency.

The weakest form of consistency is Eventual. This is where there are no ordering guarantees to the data a client is being read, so the experience is not affected by waiting for globally committed writes, but the trade-off is not having ordered information which would be selected when that is not a requirement.

Which companies use Cosmos DB?

Checking Stackshare, there are some well-known brands included in the roll call of those harnessing the power and flexibility afforded through Cosmos DB. Microsoft, Daimler, ASOS, Airbus, Rumble, and many others are known users of this solution.

OK I am sold! Have you any top Cosmos DB tips?

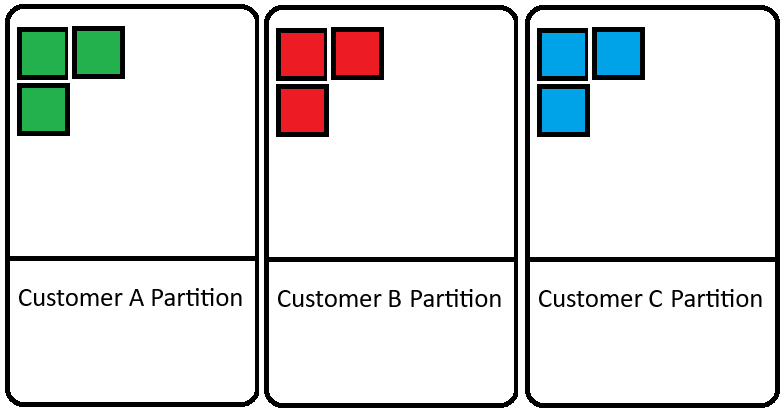

To spread load and allow your database to scale, Cosmos DB uses a partition key to break your data into logical partitions. Those logical partitions are distributed over several physical partitions depending on your data size which Cosmos DB takes care of. Choosing the correct partition key is one of the most important decisions you make when setting up your container. You need to make sure you choose something that will be a fair distribution of data.

For example, if you were building an ecommerce solution, let us consider the customer record and their associated addresses, orders, and wish lists. Having data partitioned by account created date would be a poor choice. You are keeping a single partition (current date) “hot” with little to no data being added to other dates. This does not help Cosmos DB with scaling and can cause performance issues.

In our eCommerce example, a customer ID may be a desirable choice as the spread of orders, addresses, invoices etc. can be distributed amongst customers. Regular queries should be considered as well because complex queries (measured in Request Units or RUs) are more expensive because they will use more RUs. Look at common queries your business will need to make – if you can align your partitions and what you regularly query (for example country), it may save you some money.

Denormalisation is the opposite of… normalisation! Normalisation is breaking out data into separate tables (such as customers and orders) for helping reduce redundant (duplicate) data. It keeps database sizes in check and helps reduce issues updating large records.

So why would you want to denormalise (embed the customer record and their orders) in a NoSQL design? Well, it stems from reducing the RUs again. Yes, there must be some thought process to appending the record but reducing the complexity of queries for cost and performance purposes. It is not always a sound strategy, and you will need to check your use case. But for one to few records like our customer and their orders, it is considered best practice.

Where can I learn more about Cosmos DB?

I will always start with Microsoft Docs – following a certification learning path for a full overview of the product if there is one, or its heavily featured in one of them. Fortunately, Cosmos DB does get a dedicated certification – DP-420: Azure Cosmos DB Speciality. I have done this certification, and it is an excellent way of making sure you have the skills to work with Cosmos DB.

Beyond Microsoft Docs, it is a sensible idea to familiarise yourself with the costs of a project before deploying. The Azure Pricing Calculator can give you some price estimates based on what you tell it about your locations required, the size of the data you intend to store and if you are planning to use reserved capacity to save money.